Dual kernel architectures have played a crucial role in enabling low-latency real-time capabilities on Linux® for nearly three decades. Despite having been extensively documented and discussed in academia and despite their successful implementation in embedded and industrial solutions, they have faced skepticism within the kernel community.

In today's computing landscape, where processors often run virtual machines, hypervisors, and trusted execution environments alongside the Linux kernel, we should ask ourselves why no dual kernel framework has been merged upstream.

Configuring and working with a fully preemptible real-time kernel can be frustrating and time-consuming. There are many blogs that can attest to that. The release cycles are long, with complex back-ports and challenging software updates.

As a software architect, and depending on the use case, it's important to consider alternatives. Do you really need a fully preemptible kernel to run real-time applications? Or could a real-time co-kernel provide the necessary latencies and determinism for those video, audio, or acquisition pipelines—while still allowing you to work on the latest kernel version and receive regular security updates?

Enter Xenomai4, the evolution of the dual kernel architecture.

The Dual Kernel Evolution: Xenomai4

Over the years, the contributions made by PREEMPT_RT to the Linux kernel have been nothing short of monumental, to the point that we now have an almost fully preemptible kernel with acceptable interrupt and scheduling latencies.

But that does not change the fact that, sometimes, a dual kernel architecture is simply the better choice.

Broadly speaking, a dual kernel architecture introduces a separate real-time core alongside the Linux kernel, connected to the hardware via a high-priority execution stage. The real-time enabler therefore consists of:

- a high priority execution stage that will service every interrupt.

- a real-time core.

- a user library that enables invocation of the real-time core services.

The separate real-time core in a dual kernel architecture can be developed and maintained independently of the Linux kernel.

Real-time applications rely on this core for their scheduling and latency requirements while the rest of the system continues to execute, unaware of its existence. This way of partitioning the system leads to easier maintenance and faster bug fixes for real-time issues.

In many real-world embedded use cases, systems don't need the whole kernel logic just to ensure that a web browser cannot affect the response time of a low-latency application. Developers would rather not accept that, by running Linux, they have to guarantee system-wide priority inheritance for core locks shared by all applications.

A dual kernel architecture provides a clean separation that addresses this concern; real-time tasks are handled by the real-time core and are not affected by the non-realtime system.

The Xenomai4 architecture, configuration, and limitations have been meticulously described in the Xenomai4 documentation page. Please do use it for reference, as it is totally worth your time.

What sets Xenomai4 apart from its predecessor, Xenomai3, is the complete redesign of the high-priority execution stage. This was done for portability and maintainability: I-pipe—the second iteration of the initial Adeos interrupt pipeline—has been fully replaced by Dovetail.

Any concerns or even distrust towards Xenomai within the kernel community may have stemmed from uncertainties about the long-term maintainability of its interrupt pipeline. However, with the introduction of Dovetail, these concerns have been addressed. Unlike PREEMPT_RT, which is typically available only in long-term support (LTS) kernels, Xenomai4 tracks the latest kernel versions.

Furthermore, projects like PipeWire, the leading multimedia framework, have already incorporated configurable support for Xenomai4 (check the EVL meson option), highlighting its compatibility and applicability in real-world scenarios.

Xenomai4 on the Linux microPlatform

With the release of the Linux microPlatform (LmP) v91, Foundries.io™ now offers the option to configure real-time Linux support on the iMX8 platform using Xenomai4.

Even though this feature is being announced as experimental, don't let this tag mislead you about its performance: it only means that, since we have no customers for it (as opposed to PREEMPT_RT or Jailhouse), we cannot provide dedicated levels of support.

The web is replete with examples and resources on utilizing Xenomai, making it an ideal tool for learning about real-time Linux. One such example is an entry I wrote years ago for an ELC demo in San Diego. I showed Android using Xenomai to generate the PWM signal required to control servo motors that adjusted the pan and tilt angles of a camera stand. The demo code is still available in the Xenomai3 tree (you can check the Xenomai pull request here and a companion utility to control the motors using the arrow keys on any keyboard here).

For those engaged in activities such as student projects, maker initiatives, research, or prototyping, the well-documented nature of Xenomai4 makes it an excellent choice for learning real-time Linux. This is where instrumenting the real-time core or the interrupt pipeline becomes a necessity.

This post aims to provide guidance on platform development using the Linux microPlatform and Xenomai4, including booting an LmP Xenomai4 release on the iMX8mm LPDDR4 board and facilitating efficient rebuilding, modification, and updating of the Xenomai4-enabled kernel and its associated library, libevl.

Building the Linux microPlatform for Platform Development

For early platform development, you probably don't need access to all the features offered by FoundriesFactory®. Instead, you can just follow the README.md from our lmp-manifest.

We start by syncing the repository:

repo init -u https://github.com/foundriesio/lmp-manifest -b refs/tags/91

repo sync

We then build for the platform using the lmp-base distro.

Selecting this distro overrides some configurations from LmP so that we can generate a friendly system for development. This means that:

- It does not support OSTree.

- The rootfs is read-writable (as Poky’s default), and as a consequence, OTA is disabled.

- The image architecture is different: there are two directories, boot and root, for the boot files and the rootfs, respectively.

- The U-Boot configuration is defined apart from LmP.

- The U-Boot scripts are provided as a package and can be easily changed.

- The Linux kernel binary and the required DTB files are provided as separate files instead of inside a boot image. This way the binaries can be replaced for testing purposes.

To set up that environment, run:

DISTRO=lmp-base MACHINE=imx8mm-lpddr4-evk source setup-environment

We should now select Xenomai support on the build. To do that, edit the configuration file conf/local.conf and modify the preferred kernel provider and enable the Xenomai4 feature. You will also have to accept the Freescale EULA:

PREFERRED_PROVIDER_virtual/kernel:mx8mm-nxp-bsp = "linux-lmp-fslc-imx-xeno4"

MACHINE_FEATURES:append = " xeno4"

ACCEPT_FSL_EULA = "1"
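If you prefer to script this step rather than edit the file by hand, the three settings above could be appended with a small helper. This is only a sketch: `append_xeno4_conf` is a hypothetical name, and the guard simply assumes that the presence of the kernel provider string means the settings were already added.

```shell
# Sketch: append the Xenomai4 settings to a BitBake local.conf, once.
# append_xeno4_conf is a hypothetical helper, not part of LmP.
append_xeno4_conf() {
    local conf="$1"
    # Do nothing if the kernel provider is already set (assumed marker).
    grep -q 'linux-lmp-fslc-imx-xeno4' "$conf" 2>/dev/null && return 0
    cat >> "$conf" <<'EOF'
PREFERRED_PROVIDER_virtual/kernel:mx8mm-nxp-bsp = "linux-lmp-fslc-imx-xeno4"
MACHINE_FEATURES:append = " xeno4"
ACCEPT_FSL_EULA = "1"
EOF
}

# Usage, from the build directory:
#   append_xeno4_conf conf/local.conf
```

Calling the helper a second time leaves the file unchanged, which is convenient when the setup is re-run.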

⚠️ NOTE: To get access to the kernel tree and Xenomai4 sources after we have built the images, we also edit conf/auto.conf and remove the INHERIT += "rm_work" line. This will be useful if you need to rebuild the kernel or modify its default configuration options.
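The removal of the rm_work line can also be done non-interactively. The sketch below assumes GNU sed (for in-place editing) and uses a hypothetical helper name, `drop_rm_work`; the pattern tolerates spacing variations around the `+=` operator.

```shell
# Sketch: delete the rm_work inherit line from a BitBake configuration file.
# drop_rm_work is a hypothetical helper; "sed -i" assumes GNU sed.
drop_rm_work() {
    local conf="$1"
    # In a basic regular expression "+" is a literal character, so this
    # matches: INHERIT += "rm_work"
    sed -i '/^[[:space:]]*INHERIT[[:space:]]*+=[[:space:]]*"rm_work"/d' "$conf"
}

# Usage, from the build directory:
#   drop_rm_work conf/auto.conf
```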

Then issue the build command:

bitbake lmp-base-console-image

When the build is done, all the artifacts will be located in build-lmp-base/deploy/images/imx8mm-lpddr4-evk.

The kernel tree—in case you wish to clone it locally for development outside of bitbake—is in build-lmp-base/tmp-lmp_base/work-shared/imx8mm-lpddr4-evk/kernel-source and the kernel configuration in build-lmp-base/tmp-lmp_base/work-shared/imx8mm-lpddr4-evk/kernel-build-artifacts/.config

Flashing the lmp-base Distribution

Flashing to the eMMC in the i.MX 8M Mini Evaluation Kit requires using MFGTools from NXP®. These tools could be pulled from one of our recent CI releases.

Download the file mfgtool-files-imx8mm-lpddr4-evk.tar.gz and unpack it into an installer directory:

mkdir -p installer && tar -zxvf mfgtool-files-imx8mm-lpddr4-evk.tar.gz -C installer

Then edit installer/mfgtool-files-imx8mm-lpddr4-evk/full_image.uuu and remove the line FB: flash sit ../sit-imx8mm-lpddr4-evk.bin
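As an alternative to opening an editor, the line can be filtered out with grep. The helper name `drop_sit_line` is an assumption made for this sketch; it reads the script on standard input and writes the filtered version to standard output, so the original file is never modified in place.

```shell
# Sketch: copy a uuu script from stdin to stdout without the "flash sit" step.
# drop_sit_line is a hypothetical helper name.
drop_sit_line() {
    # grep -v exits non-zero when it prints nothing; "|| true" keeps that
    # case from aborting a script that runs under "set -e".
    grep -v '^FB: flash sit ' || true
}

# Usage:
#   f=installer/mfgtool-files-imx8mm-lpddr4-evk/full_image.uuu
#   drop_sit_line < "$f" > "$f.new" && mv "$f.new" "$f"
```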

You should have something like this:

$ cat installer/mfgtool-files-imx8mm-lpddr4-evk/full_image.uuu

uuu_version 1.2.39

SDP: boot -f imx-boot-mfgtool

SDPV: delay 1000

SDPV: write -f u-boot-mfgtool.itb

SDPV: jump

FB: ucmd setenv fastboot_dev mmc

FB: ucmd setenv mmcdev ${emmc_dev}

FB: ucmd mmc dev ${emmc_dev} 1; mmc erase 0 0x2000

FB: flash -raw2sparse all ../lmp-base-console-image-imx8mm-lpddr4-evk.wic

FB: flash bootloader ../imx-boot-imx8mm-lpddr4-evk

FB: flash bootloader2 ../u-boot-imx8mm-lpddr4-evk.itb

FB: flash bootloader_s ../imx-boot-imx8mm-lpddr4-evk

FB: flash bootloader2_s ../u-boot-imx8mm-lpddr4-evk.itb

FB: ucmd if env exists emmc_ack; then ; else setenv emmc_ack 0; fi;

FB: ucmd mmc partconf ${emmc_dev} ${emmc_ack} 1 0

FB: done

At this point, you can either copy or create symbolic links for the previously built images at the base of the installer directory:

- imx-boot-imx8mm-lpddr4-evk

- lmp-base-console-image-imx8mm-lpddr4-evk.wic

- u-boot-imx8mm-lpddr4-evk.itb
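Creating the links could look like the sketch below. `link_artifacts` is a hypothetical helper; it takes the deploy directory and the installer directory as arguments, and relies on `realpath` from GNU coreutils so that the links keep working regardless of the current directory.

```shell
# Sketch: symlink the three build artifacts into the installer directory.
# link_artifacts is a hypothetical helper; both paths come from the caller.
link_artifacts() {
    local deploy="$1" installer="$2" f
    for f in imx-boot-imx8mm-lpddr4-evk \
             lmp-base-console-image-imx8mm-lpddr4-evk.wic \
             u-boot-imx8mm-lpddr4-evk.itb; do
        # Stop early if an expected artifact is missing from the deploy dir.
        [ -e "$deploy/$f" ] || { echo "missing: $deploy/$f" >&2; return 1; }
        # Link to the resolved absolute path of the artifact.
        ln -sf "$(realpath "$deploy/$f")" "$installer/$f"
    done
}

# Usage:
#   link_artifacts build-lmp-base/deploy/images/imx8mm-lpddr4-evk installer
```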

The installer directory will look like this:

├── imx-boot-imx8mm-lpddr4-evk
├── lmp-base-console-image-imx8mm-lpddr4-evk.wic
├── u-boot-imx8mm-lpddr4-evk.itb
└── mfgtool-files-imx8mm-lpddr4-evk
    ├── bootloader.uuu
    ├── fitImage-imx8mm-lpddr4-evk-mfgtool
    ├── full_image.uuu
    ├── imx-boot-mfgtool
    ├── u-boot-mfgtool.itb
    ├── uuu
    ├── uuu.exe
    └── uuu_mac

Now you are finally ready to flash to eMMC. Please follow the instructions to configure the switches and issue the flash command for your platform as described on our hardware preparation page.

Replacing the Kernel, Drivers, and Xenomai4 Libraries

Booting the system to the shell with the user fio and password fio shows:

Linux-microPlatform Base (no ostree) 4.0.9 imx8mm-lpddr4-evk

imx8mm-lpddr4-evk login: fio

Password: fio

fio@imx8mm-lpddr4-evk:~$ evl -v

evl.0.44 -- #11022a7 (2023-05-21 15:21:54 +0200) [requires ABI 32]

Now is a good time to calibrate and test your system.

To replace the Linux kernel, drivers, and Xenomai libraries you could use bitbake to regenerate the wic image, and then flash it all again.

However, a better option available to us, since we are using lmp-base, is U-Boot's USB mass storage class (UMS).

For this, stop the boot sequence at the U-Boot prompt and expose the eMMC block device to the USB host:

u-boot=> ums 0 mmc 2

UMS: LUN 0, dev mmc 2, hwpart 0, sector 0x0, count 0x1d5a000

On your host, you will now have a couple of mount points: boot and root. You should have write access to both.

Let's assume that /media/user/boot and /media/user/root are the mount points.
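Since the scripts that follow write straight into those mount points, a small guard can catch the case where UMS is not active or the partitions were not mounted. This is a sketch with a hypothetical helper name, `require_writable`; it only checks that each path is an existing, writable directory, not that it is really the eMMC.

```shell
# Sketch: refuse to continue unless every given path is a writable directory.
# require_writable is a hypothetical helper name.
require_writable() {
    local dir
    for dir in "$@"; do
        if [ ! -d "$dir" ] || [ ! -w "$dir" ]; then
            echo "not a writable directory: $dir" >&2
            return 1
        fi
    done
}

# Usage, near the top of an install script:
#   require_writable /media/user/boot /media/user/root || exit 1
```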

Re-building and replacing the Xenomai4 library and tests is as painless as described in this script:

#!/bin/bash

set -e

cd .build/libevl

meson setup --cross-file /home/user/libevl/meson/aarch64-none-linux-gnu \
    -Dbuildtype=release \
    -Dprefix=/usr \
    -Duapi=/home/user/linux-evl \
    . /home/user/libevl

meson compile

read -p "Press Enter to install in /media/user/root"

DESTDIR=/media/user/root meson install

sudo sync

umount /media/user/boot

umount /media/user/root

Re-building and replacing the Xenomai4-enabled kernel is equally painless:

#!/bin/bash

set -e

TREE=/home/user/linux-evl

cd $TREE

## The defconfig can be generated from the Yocto layer

ARCH=arm64 CROSS_COMPILE="ccache aarch64-none-linux-gnu-" make O=build-aarch64 xenomai_defconfig

ARCH=arm64 CROSS_COMPILE="ccache aarch64-none-linux-gnu-" make O=build-aarch64 menuconfig

ARCH=arm64 CROSS_COMPILE="ccache aarch64-none-linux-gnu-" make -k O=build-aarch64 -j`nproc` Image

ARCH=arm64 CROSS_COMPILE="ccache aarch64-none-linux-gnu-" make -k O=build-aarch64 -j`nproc` freescale/imx8mm-evk-qca-wifi.dtb

ARCH=arm64 CROSS_COMPILE="ccache aarch64-none-linux-gnu-" make -k O=build-aarch64 -j`nproc` modules

ARCH=arm64 CROSS_COMPILE="ccache aarch64-none-linux-gnu-" make -s O=build-aarch64 -j`nproc` modules_install INSTALL_MOD_PATH=MODULES INSTALL_MOD_STRIP=1

mkdir -p RELEASE

(cd build-aarch64/MODULES ; cp -Rf lib/* ../../RELEASE/ ; cd ../../RELEASE; cp ../build-aarch64/arch/arm64/boot/Image . ; cp ../build-aarch64/arch/arm64/boot/dts/freescale/imx8mm-evk-qca-wifi.dtb . ; cp ../build-aarch64/arch/arm64/boot/dts/freescale/imx8mm-evk.dtb .)

read -p "Press Enter to install in /media/user/boot and /media/user/root"

cd $TREE/RELEASE

cp Image /media/user/boot

cp imx8mm-evk-qca-wifi.dtb /media/user/boot/

cp -Rf modules/* /media/user/root/lib/modules/

sync

umount /media/user/boot

umount /media/user/root

After either of the above scripts completes, you can stop UMS on the U-Boot shell and type boot to continue the execution.

It is that simple.

What to Expect on the iMX8mm-EVK Development Board

The Linux microPlatform supports containers, and Xenomai4 applications can be containerized while preserving their real-time capabilities. Note that these containers need to run in privileged mode, with the security risks that this implies.

On this particular platform, there is a significant improvement to be had by enabling CPU isolation. The corresponding boot argument (typically isolcpus) removes the specified CPUs from the general scheduling domains, so that by default no process runs on them unless it is explicitly pinned there.

Enabling CPU isolation and pinning the latency tester to a core (the standard PREEMPT_RT way of operating and providing metrics) gives reasonable worst-case figures in the range of 70 microseconds.
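Before running the test it is worth confirming that the target core is actually isolated. The kernel reports the isolated CPUs as a list such as 1-3 in /sys/devices/system/cpu/isolated. The sketch below, with a hypothetical helper name `cpu_in_list`, checks whether a CPU number belongs to a list in that format.

```shell
# Sketch: succeed if CPU number $1 is part of a kernel cpulist such as "1-3,5".
# cpu_in_list is a hypothetical helper name.
cpu_in_list() {
    local cpu="$1" list="$2" part lo hi
    local IFS=','
    for part in $list; do
        lo="${part%-*}"   # "1-3" -> 1, "5" -> 5
        hi="${part#*-}"   # "1-3" -> 3, "5" -> 5
        [ "$cpu" -ge "$lo" ] && [ "$cpu" -le "$hi" ] && return 0
    done
    return 1
}

# Usage on the target:
#   cpu_in_list 1 "$(cat /sys/devices/system/cpu/isolated)" && echo "CPU1 is isolated"
```

An empty list, which is what the kernel reports when no CPU is isolated, makes the helper return failure.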

Below are the latency test results, captured while stress-ng --cpu 4 --vm 2 --hdd 1 --fork 8 runs to simulate load.

RTT| 10:21:22 (user, 1000 us period, priority 98, CPU1)

RTH|----lat min|----lat avg|----lat max|-overrun|---msw|---lat best|--lat worst

RTD| 2.406| 2.725| 7.503| 0| 0| 2.390| 62.448

RTD| 2.399| 2.749| 8.781| 0| 0| 2.390| 62.448

RTD| 2.430| 2.737| 8.819| 0| 0| 2.390| 62.448

RTD| 2.406| 2.735| 9.138| 0| 0| 2.390| 62.448

RTD| 2.403| 7.256| 26.268| 0| 0| 2.390| 62.448

RTD| 3.546| 13.228| 28.719| 0| 0| 2.390| 62.448

RTD| 4.023| 11.837| 29.460| 0| 0| 2.390| 62.448

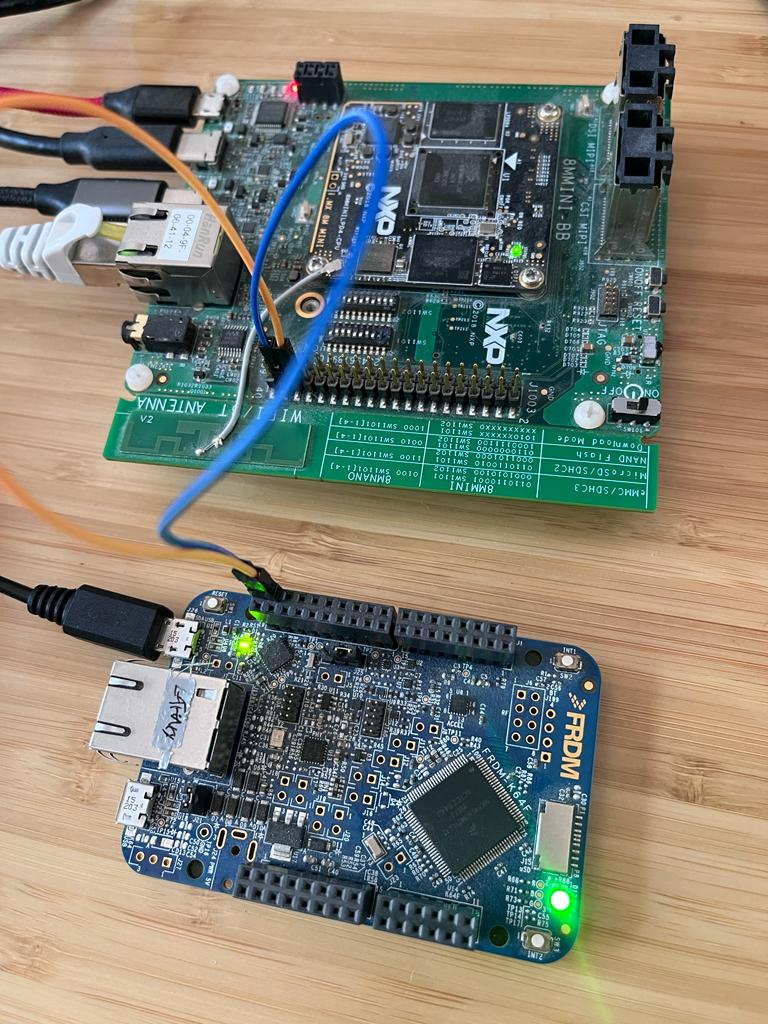
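When a run is long, it is easier to reduce the trace to a few numbers than to read it line by line. The sketch below assumes the pipe-separated RTD layout shown above, where the third field is the average latency and the fourth the maximum; `rtd_summary` is a hypothetical helper name.

```shell
# Sketch: summarise the RTD lines of a latency trace read from stdin.
# rtd_summary is a hypothetical helper; the field positions are taken
# from the RTH header line of the trace.
rtd_summary() {
    awk -F'|' '
        /^RTD/ {
            n++
            sum += $3                       # running total of "lat avg"
            if ($4 + 0 > max) max = $4 + 0  # highest "lat max" seen so far
        }
        END {
            if (n) printf "samples=%d avg=%.3f max=%.3f\n", n, sum / n, max
        }
    '
}

# Usage:
#   rtd_summary < latency.log
```

Lines that do not start with RTD, such as the RTT and RTH headers, are ignored.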

You can achieve similar results by utilizing instrumentation tools through GPIO events. One approach is to have a remote board equipped with a real-time operating system (RTOS) send a signal to the target board and measure the response time. In a Linux environment, you can create a straightforward real-time application that listens for GPIO pin events and reacts to the interrupt by toggling another GPIO. This methodology allows for comparative analysis and obtaining relevant figures.

Please do check the benchmarking page on the Xenomai4 documentation site.

If you decide to try the instrumentation you could hook your wires to J1003 pins 38 and 36:

Then apply the following changes to the kernel device tree (you will have to disable SAI5_RXD0 and SAI5_RXD2 if they were already in use):

&iomuxc {

pinctrl-0 = <&pinctrl_hog>;

pinctrl_hog: hoggrp {

fsl,pins = <

MX8MM_IOMUXC_SAI5_RXD2_GPIO3_IO23 0x19

MX8MM_IOMUXC_SAI5_RXD0_GPIO3_IO21 0x19

>;

};

};
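A user-space test needs to refer to these two pins by number. On i.MX parts the banks are numbered from 1 and hold 32 lines each, so GPIO3_IO21 is line 21 of the third bank. The sketch below computes the flat number used by the legacy numbering scheme; it assumes the GPIO banks are registered in order starting from base 0, which should be verified on the running system. `imx_gpio_number` is a hypothetical helper name.

```shell
# Sketch: flat GPIO number for an i.MX pin given as bank and line.
# Assumes banks are registered in order with base 0 (check on the target).
# imx_gpio_number is a hypothetical helper name.
imx_gpio_number() {
    # Banks start at 1 and hold 32 lines each.
    echo $(( ($1 - 1) * 32 + $2 ))
}

# Usage:
#   imx_gpio_number 3 21   # GPIO3_IO21
#   imx_gpio_number 3 23   # GPIO3_IO23
```

Under the same assumption, tools that address pins by chip and offset would see both pins on the third GPIO controller, at offsets 21 and 23.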

Not enabling CPU isolation makes this platform a bit less attractive, with latencies going up substantially. This is consistent with results measured on other boards using the iMX8M Mini.

This is a trace captured with no isolation.

RTT| 10:00:22 (user, 1000 us period, priority 98, CPU1-noisol)

RTH|----lat min|----lat avg|----lat max|-overrun|---msw|---lat best|--lat worst

RTD| 80.934| 91.452| 125.810| 0| 0| 2.382| 126.987

RTD| 84.503| 89.313| 108.758| 0| 0| 2.382| 126.987

RTD| 87.076| 90.356| 108.393| 0| 0| 2.382| 126.987

RTD| 85.302| 91.018| 110.702| 0| 0| 2.382| 126.987

RTD| 86.036| 92.085| 110.385| 0| 0| 2.382| 126.987

RTD| 85.520| 92.058| 124.744| 0| 0| 2.382| 126.987

RTD| 84.825| 90.972| 116.370| 0| 0| 2.382| 126.987

RTD| 41.057| 93.812| 114.023| 0| 0| 2.382| 126.987

RTD| 40.609| 95.256| 138.789| 0| 0| 2.382| 138.789

RTD| 38.352| 93.473| 130.789| 0| 0| 2.382| 138.789

RTD| 41.262| 93.551| 112.122| 0| 0| 2.382| 138.789

RTD| 40.628| 94.218| 111.344| 0| 0| 2.382| 138.789

RTD| 49.176| 97.133| 132.460| 0| 0| 2.382| 138.789

Identifying the exact cause of this 2x loss in performance can be challenging without engaging the silicon vendor. It is possible that the issue relates to internal L2 cache maintenance operations that occur after adjusting the page table entries, leading to delayed interrupts even when the CPU has not explicitly masked them. However, confirming this hypothesis is difficult at this stage.
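The 2x figure can be checked against the two traces. One way to read it, and this is an interpretation rather than something the traces state, is to compare the worst-case latency without isolation (138.789) with the worst case with isolation (62.448). `latency_ratio` is a hypothetical helper name.

```shell
# Sketch: ratio between two latencies, printed with two decimals.
# latency_ratio is a hypothetical helper; both arguments use the same unit.
latency_ratio() {
    awk -v a="$1" -v b="$2" 'BEGIN { printf "%.2f\n", a / b }'
}

# Worst case without isolation divided by worst case with isolation:
#   latency_ratio 138.789 62.448
```

With those two values the ratio comes out at roughly 2.2.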

If the current GPIO benchmarking code in Xenomai4 seems a bit too sophisticated (it involves some networking, gnuplot and such), you could write your own instrumentation test.

As a matter of fact, what is in the Xenomai4 tree was based on something I wrote for my own consumption: the echo test.

The Zephyr code I wrote for that was equally trivial. It was originally written for the NXP FRDM-K64F, but it will run on any Zephyr-supported board.

The code can be executed with or without invoking the Xenomai4 real-time core. If the PREEMPT_RT feature is available, real-time requests will be handled by the preemptible kernel, enabling you to benchmark its performance as well. This flexibility allows you to explore different execution options and assess the impact of utilizing the real-time capabilities provided by Xenomai4 or relying on the preemptible kernel.

When comparing the co-kernel to a Linux preemptible kernel, it is important to consider that PREEMPT_RT implies a fundamentally different use case, making a direct comparison unfair. This is due to the distinct nature of their performance paths: while co-kernels execute a well-defined real-time path, a fully preemptible kernel like PREEMPT_RT is subjected to latencies at each layer and driver. Consequently, tuning PREEMPT_RT becomes a necessity rather than an optional measure to ensure optimal performance.

In summary, the decision between a fully preemptible Linux kernel and a co-kernel involves weighing different trade-offs. General-purpose operating systems, such as Linux, are designed to support a diverse range of use cases, resulting in complexity when making decisions. Consequently, it is important to acknowledge that no single solution can perfectly and optimally address all possible scenarios.